Managing Oncology Biospecimen Data: Best Practices for Accuracy and Integrity

Sep 5, 2025

By Carolina Bermudez, Data Management Coordinator, Reference Medicine

In oncology research, the integrity of biospecimens depends not only on how they are collected and preserved but also on the accuracy and completeness of the data that accompanies them. Even the most carefully preserved tissue or blood sample can lose its scientific value if the associated metadata is incomplete, inconsistent, or incorrect.

For organizations involved in oncology biospecimen procurement, managing clinical and demographic data effectively is as critical as managing the specimens themselves. Doing so requires clear protocols, rigorous validation, and strong collaboration with collection sites, often across countries and varying levels of infrastructure.

Defining Essential Biospecimen Data Elements

The specific data required can vary from one research project to another, but there are common elements that form the backbone of specimen integrity and usability.

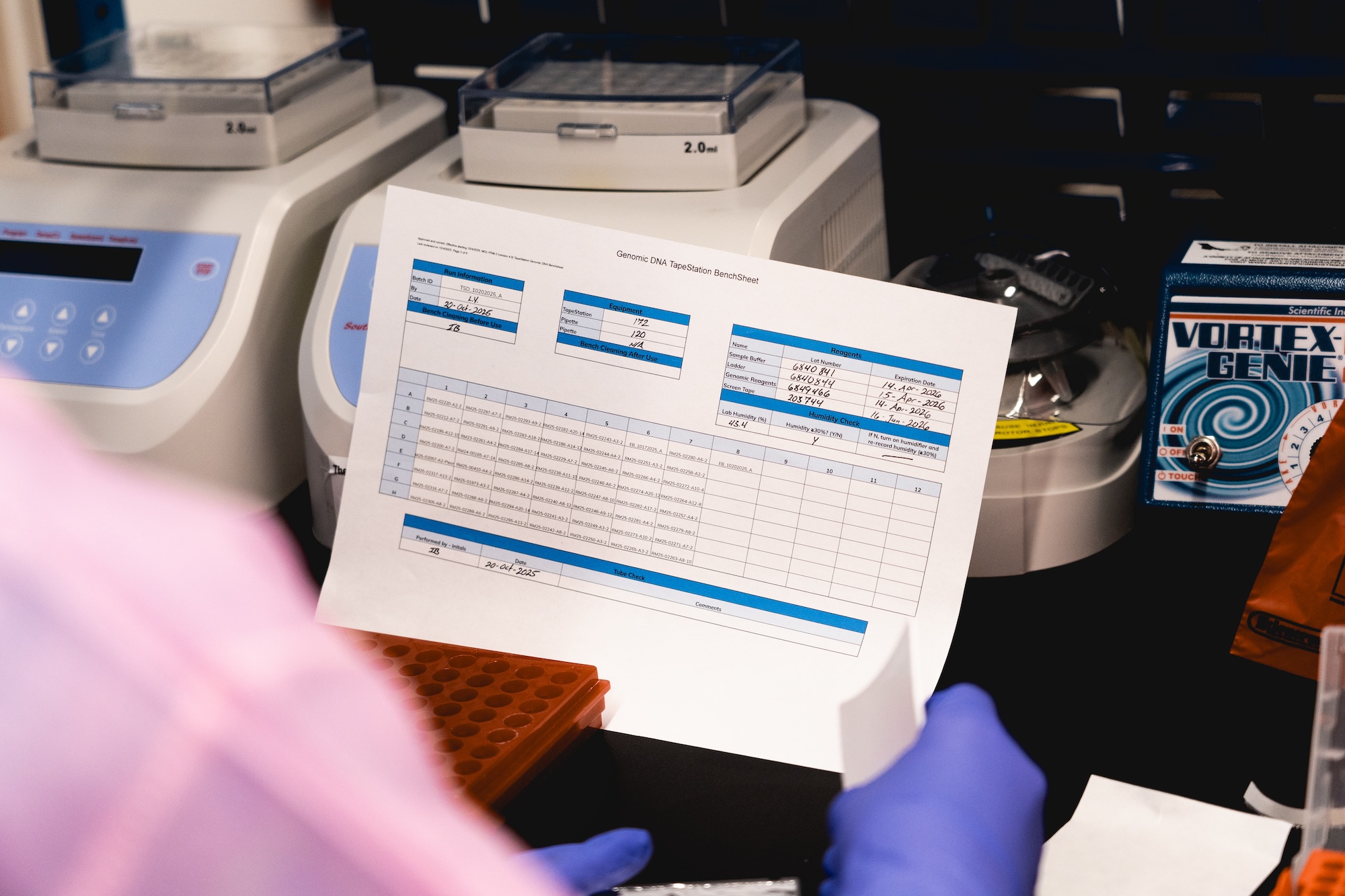

From a quality control perspective, technical measurements such as fixation times, DNA concentration thresholds, and DNA fragmentation values are vital. For example, blood samples must often be processed within two hours of collection to maintain viability, while tissue specimens should not exceed 72 hours in formalin to prevent degradation of key tissue structures and critical biological analytes.
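As a rough illustration, timing rules like these can be expressed as simple automated checks. The sketch below uses the two-hour and 72-hour figures from the examples above; the record layout, field names, and specimen type labels are assumptions made for illustration, and real limits depend on the study protocol.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Thresholds from the examples in the text; actual limits are study-specific.
MAX_BLOOD_PROCESSING = timedelta(hours=2)
MAX_FORMALIN_FIXATION = timedelta(hours=72)


@dataclass
class SpecimenTimes:
    """Hypothetical timing record for one specimen."""
    specimen_id: str
    specimen_type: str  # "blood" or "tissue" (illustrative labels)
    collected_at: datetime
    processed_at: Optional[datetime] = None
    fixation_start: Optional[datetime] = None
    fixation_end: Optional[datetime] = None


def qc_flags(s: SpecimenTimes) -> List[str]:
    """Return a list of timing problems; an empty list means no flags."""
    flags = []
    if s.specimen_type == "blood":
        if s.processed_at is None:
            flags.append("missing processing time")
        elif s.processed_at - s.collected_at > MAX_BLOOD_PROCESSING:
            flags.append("processed more than 2 hours after collection")
    elif s.specimen_type == "tissue":
        if s.fixation_start is None or s.fixation_end is None:
            flags.append("missing fixation times")
        elif s.fixation_end - s.fixation_start > MAX_FORMALIN_FIXATION:
            flags.append("fixation exceeded 72 hours")
    return flags
```

A missing timestamp is flagged rather than silently passed, since an unrecorded processing time cannot be shown to meet the threshold.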

Additionally, clinical and demographic information adds context essential for downstream analysis. Key fields include patient age, race, treatment status, surgical procedure type, clinical and pathology diagnoses, TNM values, grade, stage, and ICD codes. Together, these details help ensure that oncology specimens meet study inclusion criteria and that research outcomes are valid.

Establishing Clear Requirements with Biospecimen Collection Partners

Consistency starts with transparency. Successful human biospecimen procurement teams provide collection sites with clear documentation outlining required data fields and definitions before any specimens are collected. This documentation should be paired with a feasibility discussion to confirm that the site can meet the requirements.

The final work order—detailing cohort size, inclusion/exclusion criteria, processing protocols, and timelines—serves as the reference point throughout the project. When sites understand both the “what” and the “why” behind each requirement, compliance rates increase and the quality of data improves.

Building and Maintaining Collaborative Partnerships with Biospecimen Collection Sites

Data quality is rarely improved through one-off instructions; it is the product of sustained, respectful collaboration. Regular communication with collection sites helps reinforce standards and provides opportunities to address challenges early in the process. Requests should be framed as cooperative problem-solving rather than rigid demands. When trust is established, collection sites are more likely to respond quickly to missing data queries and to proactively flag potential issues.

Training and Continuous Improvement

Effective training for collection site personnel includes sharing standard operating procedures (SOPs), providing study-specific protocols, and walking through work orders in detail. This upfront investment helps align expectations and builds site capacity to deliver consistent, high-quality data over time.

Continuous improvement comes from regular feedback loops—reviewing submitted data, identifying recurring issues, and offering practical guidance on how to resolve them.

Validating and Cleaning Biospecimen Data

Data validation is most effective when it combines automated tools with manual review. Parsing tools, such as those that extract structured data from pathology reports, can speed up the initial review. By cross-referencing parsed fields with manifests—checking age, sex, date of collection, diagnosis, and surgical procedure—discrepancies can be quickly identified.

When inconsistencies arise, specimens should be placed on hold until clarification is received, and corrected reports should replace any erroneous records to maintain data integrity. Holding specimens until the records agree prevents errors from moving downstream into research workflows.
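The cross-check described above can be sketched in a few lines. This is a minimal example, assuming the manifest row and the parsed pathology report have already been loaded into dictionaries. The key names are hypothetical, and the comparison is deliberately simple (case-insensitive, whitespace-trimmed), so it complements manual review rather than replacing it.

```python
from typing import Dict, List

# Fields named in the text; the dictionary keys themselves are hypothetical.
FIELDS_TO_COMPARE = ["age", "sex", "collection_date", "diagnosis", "surgical_procedure"]


def _norm(value: object) -> str:
    """Normalize a value for comparison: string form, trimmed, lowercase."""
    return "" if value is None else str(value).strip().lower()


def find_discrepancies(manifest_row: Dict[str, object],
                       parsed_report: Dict[str, object]) -> List[str]:
    """List every field that is missing or differs between the two sources."""
    issues = []
    for field in FIELDS_TO_COMPARE:
        m = _norm(manifest_row.get(field))
        p = _norm(parsed_report.get(field))
        if not m or not p:
            where = " and ".join(
                name for name, v in (("manifest", m), ("report", p)) if not v
            )
            issues.append(f"{field}: missing in {where}")
        elif m != p:
            issues.append(
                f"{field}: manifest={manifest_row[field]!r} report={parsed_report[field]!r}"
            )
    return issues


def should_hold(issues: List[str]) -> bool:
    """A specimen stays on hold while any discrepancy is unresolved."""
    return bool(issues)
```

For example, with invented values, a manifest listing the procedure as `"Lumpectomy"` and a report listing `"Mastectomy"` would produce one issue for `surgical_procedure`, and `should_hold` would return `True` until the site clarifies.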

Common Oncology Biospecimen Data Challenges

Several issues recur in oncology biospecimen data management:

- Translation errors in non-English pathology reports, which can distort clinical descriptions or diagnoses

- Mismatches between pathology diagnoses and microscopic descriptions

- Missing fields in manifests, often due to site-specific differences in recordkeeping

Addressing these requires both technical processes—such as discrepancy flags in the LIMS—and relationship-driven follow-up with site contacts.
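On the technical side, a missing-field check is one of the simpler flags to automate. The sketch below assumes manifest rows have been read into dictionaries. The list of required fields is hypothetical and would in practice come from the work order. The output is a per-specimen list of gaps that could seed a follow-up query to the site.

```python
from typing import Dict, Iterable, List

# Hypothetical required fields; the real list comes from the work order.
REQUIRED_FIELDS = ["donor_id", "age", "sex", "diagnosis", "collection_date"]


def missing_field_report(rows: Iterable[Dict[str, str]]) -> Dict[str, List[str]]:
    """Map each incomplete row to the required fields it is missing."""
    report = {}
    for i, row in enumerate(rows, start=1):
        missing = [f for f in REQUIRED_FIELDS if not str(row.get(f) or "").strip()]
        if missing:
            # Fall back to the row position when the donor ID itself is absent.
            label = str(row.get("donor_id") or "").strip() or f"row {i}"
            report[label] = missing
    return report
```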

Working Across Varied Data Infrastructure

Not all collection sites have the same level of digital maturity or data literacy. A centralized intake process that can accept data in multiple formats and then normalize it into a consistent template is essential. Although most sites may use Excel, the organization and formatting of their files often differ.

Having a standard internal format for accessioning into the LIMS ensures that all specimens, regardless of origin, meet the same data quality standards before they are made available to researchers.
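One way to picture this normalization step is a header-alias map that translates each site's column names into the internal template. The sketch below is illustrative only: the template columns and aliases are assumptions, not an actual LIMS schema, and it expects rows that have already been read from a spreadsheet into dictionaries.

```python
from typing import Dict, List, Tuple

# Hypothetical internal template and known site header variants.
TEMPLATE_COLUMNS = ["donor_id", "age", "sex", "diagnosis", "collection_date"]
HEADER_ALIASES = {
    "donor id": "donor_id", "patient id": "donor_id",
    "age": "age", "age at collection": "age",
    "sex": "sex", "gender": "sex",
    "diagnosis": "diagnosis", "pathology diagnosis": "diagnosis",
    "collection date": "collection_date", "date of collection": "collection_date",
}


def normalize_row(site_row: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Map one site row onto the template; also return headers that did not map."""
    out = {col: "" for col in TEMPLATE_COLUMNS}
    unmapped = []
    for header, value in site_row.items():
        # Treat "Patient_ID", "patient id", and " Patient  ID " as the same header.
        key = " ".join(header.replace("_", " ").split()).lower()
        target = HEADER_ALIASES.get(key)
        if target is None:
            unmapped.append(header)
        else:
            out[target] = str(value).strip()
    return out, unmapped
```

Returning the unmapped headers, rather than dropping them silently, lets a data manager decide whether a new alias is needed or whether the column carries information the template does not yet capture.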

The Importance of Data Cleanliness

Clean data accelerates research by eliminating the delays caused by resolving discrepancies. Poor data cleanliness can result in mislabeling, incorrect diagnoses, or mismatched donor IDs—issues that can undermine entire studies.

In high-stakes research, where patient-derived samples are scarce and expensive to procure, ensuring data accuracy is not optional; it is foundational to scientific reliability.

Geographic and Logistical Considerations for Global Biospecimen Procurement

Global procurement networks must account for geopolitical and logistical realities. For example, even in regions affected by conflict, such as Ukraine, large biorepositories have maintained stable collection operations. The primary disruption has been occasional shipping delays, which can often be mitigated by rescheduling within a short window.

Proper preservation methods, such as formalin fixation and paraffin embedding for tissue blocks, allow specimens to withstand these delays without loss of integrity—provided that quality parameters were met before shipping.

Opportunities for Process Improvements

Even with limited resources, targeted investments can yield significant efficiency gains. One such improvement is enabling batch uploads of pathology reports directly into individual case records within the LIMS. This could reduce manual steps and give both data managers and histology reviewers immediate access to original documentation, streamlining the review process.

Automation tools—whether through LIMS enhancements or spreadsheet-based AI functions—can also play a role in identifying irregular characters, trimming excess whitespace, and flagging anomalies. While these technologies do not replace human oversight, they augment the ability to catch and correct errors quickly.
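A minimal sketch of this kind of cell-level cleanup, using only the Python standard library, might look like the following. It is an assumption about how such a tool could work, not a description of any particular LIMS feature. Note that NFKC normalization can also rewrite characters such as superscripts, so each change is reported as a flag for a person to review.

```python
import re
import unicodedata
from typing import List, Tuple


def clean_cell(value: str) -> Tuple[str, List[str]]:
    """Return the cleaned text plus flags describing what was changed."""
    flags = []

    # NFKC folds compatibility characters, e.g. a non-breaking space becomes a space.
    text = unicodedata.normalize("NFKC", value)
    if text != value:
        flags.append("unicode normalized")

    # Drop control/format characters (Unicode categories starting with "C"),
    # such as zero-width spaces, but keep ordinary whitespace like tabs for now.
    kept = "".join(
        ch for ch in text
        if ch.isspace() or not unicodedata.category(ch).startswith("C")
    )
    if kept != text:
        flags.append("invisible or control character removed")

    # Collapse runs of whitespace and trim both ends.
    collapsed = re.sub(r"\s+", " ", kept).strip()
    if collapsed != kept:
        flags.append("whitespace trimmed")

    return collapsed, flags
```

A value that comes back with an empty flag list was already clean; anything else can be queued for a quick manual look before the corrected value is accepted.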

A Framework for Reliable Biospecimen Data Management

The following principles emerge as best practices for managing oncology biospecimen data:

- Define essential data fields based on both quality control and clinical context needs.

- Document requirements clearly and confirm feasibility with collection sites before projects begin.

- Build collaborative relationships that encourage open communication and problem-solving.

- Invest in training and SOP alignment to improve data consistency at the source.

- Use both automated and manual validation to detect discrepancies before specimens enter research workflows.

- Normalize data from diverse sources into a standard internal format for accession.

- Address logistical risks proactively through preservation protocols and flexible scheduling.

- Seek process improvements that reduce manual steps and increase data accessibility.

By integrating these practices into daily operations, biospecimen procurement organizations can provide researchers with specimens that are not only physically intact but also backed by complete, accurate, and reliable data. This effort ensures that valuable samples contribute meaningfully to advancing cancer research rather than being compromised by preventable data issues.